How to create a Word Cloud in R

I have seen Word-cloud

in so many places such as magazines, websites, blogs, etc. however never

thought of making it by myself. I would like to thank R that taught me such a

wonderful technique which everybody would like to learn. I don’t know if there are

any other free options are available to create word-cloud. Let me give a quick

explanation about R first, R is a free source packages and very useful for statistical

analysis. We can use R for various purposes, from data mining to data

visualization. Word-cloud is a tool where you can highlight the words which

have been used the most in quick visualization. If you know the correct

procedure and usage, then Word Cloud is simple in R Studio. Further, a package

called “Word-cloud” is released in R, which will help us to create word-cloud.

You can follow my simple four steps mentioned below to create word-cloud.

Those are new to R or Word Cloud,

I would suggest first install R studio from the link rstudio.com

Also, the

following packages are required to create word cloud in R, so install these

following packages as well:

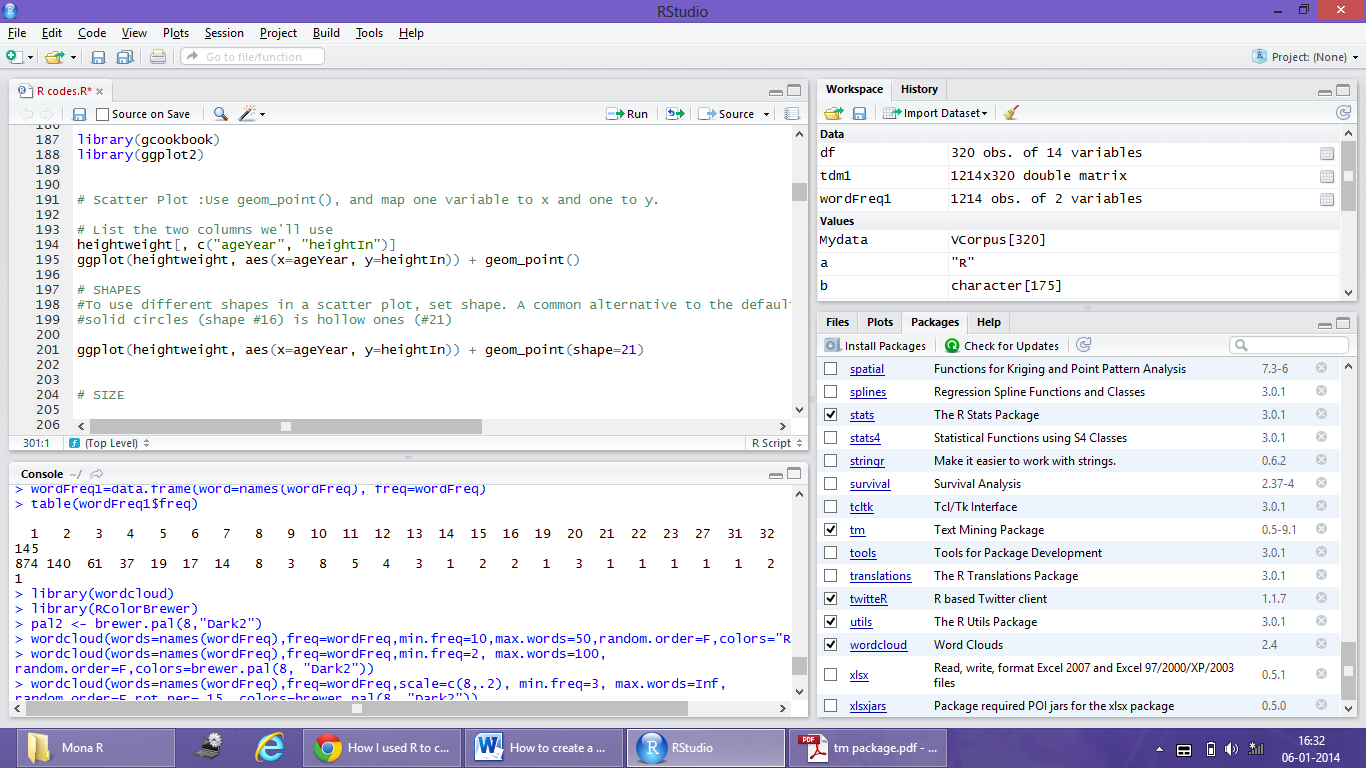

Note: You can see on the right side of the image,

there is an option of the packages you want to install

Step 1->

First we have to install the below package in

R:

library (twitteR)

Once installation is done, we will load the

Tweets data from D drive (that you have saved in your drive) in the below mentioned

codes:

> load("F:\\Mona\\Mona

R\\Tweets.RData")

For the Tweets to convert into a data frame, we

will write the below codes;

>df=do.call("rbind",lapply(tweets,

as.data.frame))

>dim(df)

Step 2 ->

Now install the

below package:

library(tm)

Corpus is collection

of data texts. VectorSource is a very useful command based on which we can

create a corpus of character vectors.

>mydata=Corpus(VectorSource(df$text))

Transformations: Once we have corpus we can modify the

document (for example stopwords removal, stemming, etc.). Transformations are

done via tm_map () function which

applies to all elements of corpus and all transformation can be done in single

text documents.

To clean the data file various commands are

used, which are listed below:

To Eliminating

extra white spaces:

> mydata=tm_map(mydata,

stripWhitespace)

To Convert to Lower Case:

>mydata=tm_map(mydata, tolower)

To remove punctuations:

>mydata=tm_map(mydata,removePunctuation)

To remove numbers:

>mydata=tm_map(mydata,

removeNumbers

Stopwords:

A further preprocessing technique is the removal of stopwords. Stopwords are

words that are so common in a language that their information value is almost

zero, in other words their entropy is very low. Therefore it is usual to remove

them before further analysis.

At first we set up a tiny list of stopwords:

In this we are adding “R” and “online” to

remove from wordlist.

>my_stopwords=c(stopwords('english'),c('R','online'))

>mydata=tm_map(mydata,

removeWords, my_stopwords)

Stemming: Stemming

is the process of removing suffixes from words to get the common origin. For

example, remove ing, ed from word to make it simple. Another example would be -

we would like to count the words stopped and stopping as being the same and

derived from stop.

Step 3 ->

Now install the

below package:

library(SnowballC)

>mydata=tm_map(mydata, stemDocument)

Term-Document Matrix: A

common approach in text mining is to create a term-document matrix from a

corpus. In the tm package the classes Term Document Matrix (tdm)and Document Term

Matrix(dtm) (depending on whether you want terms as rows and documents as columns, or vice versa)

employ sparse matrices for corpora.

>tdm<-termdocumentmatrix mydata="" o:p="">

Frequent

Terms: Now we can have a look at the

popular words in the term-document matrix.

>wordfreq=findFreqTerms(tdm,

lowfreq=70)

>termFrequency=rowSums(as.matrix(tdm1[wordfreq,]))

Now we can have a look at the popular words

in the term-document matrix.

Step 4 ->

Word Cloud: After building a term-document matrix and

frequency terms, we can show the importance of words with a word cloud.

Now install the

below package:

library(wordcloud)

library(RColorBrewer)

pal2=brewer.pal(8,'Dark2")

There are three options; you can apply any

one for different wordcloud colour:

>wordcloud(words=names(wordFreq),freq=wordFreq,min.freq=5,max.words=50,random.order=F,colors="red")

>wordcloud(words=names(wordFreq),freq=wordFreq,scale=c(5,.2),min.freq=3,max.words=

200, random.order=F, rot.per=.15, colors=brewer.pal(8, "Dark2"))

>wordcloud(words=names(wordFreq),freq=wordFreq,

scale=c(5,.2),min.freq=3, max.words=Inf,

random.order=F,rot.per=.15,random.color=TRUE,colors=rainbow(7))

The above word cloud clearly

shows that "data", "example" and "research" are

the three most important words, which validates that the in twitter these words

have been used the most.

o Words: the

words

o Freq: their frequencies

o Scale: A vector of length 2 indicating the range

of the size of the words.

o min.freq: words with frequency below min.freq will not

be plotted

o max.words: Maximum number of words to be plotted. least

frequent terms dropped

o random.order: plot words in random order. If false, they

will be plotted in decreasing frequency

o random.color: choose colors randomly from the colors. If

false, the color is chosen based on the frequency

o rot.per: proportion words with 90 degree rotation

o Colors color words from least to most frequent

o Ordered.colors if true, then colors are assigned to words

in order

Hope this helps.

Thanks for reading………….

.jpg)

.jpg)

When I tried this line wordcloud(words=names(wordFreq),freq=wordFreq,scale=c(5,.2),min.freq=3,max.words= 200, random.order=F, rot.per=.15, colors=brewer.pal(8, "Dark2")),Its showing Error in if (grepl(tails, words[i])) ht <- ht + ht * 0.2 :

ReplyDeleteargument is of length zero.I am new to R,please help me.

This comment has been removed by the author.

ReplyDeleteHi Mona, thanks for sharing this. I got the same error message as the one above. Any ideas why this is?

ReplyDelete